I built two websites and deployed them on a free-tier AWS EC2 instance. They had been running normally since March; however, today they could not be reached.

Update, 30 Sept.: Since the first occurrence in July, this has happened every two weeks to once a month.

Issues

- My sites cannot be reached from my own devices or from anyone else's; every request times out.

- I cannot connect to the EC2 instance via SSH with my .pem key as usual; it reports:

  ```
  ssh: connect to host ec2-54-188-32-115.us-west-2.compute.amazonaws.com port 22: Connection timed out
  ```

- The EC2 IP address does not respond to ping.
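To tell a dead host from a single blocked port, a TCP probe says a bit more than ping. The security group created by the launch wizard typically does not allow inbound ICMP, so ping may fail even on a healthy instance. Below is a minimal bash sketch, assuming bash was built with `/dev/tcp` support (the default on Ubuntu) and that GNU coreutils `timeout` is available; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: probe TCP ports instead of relying on ping.

# Return 0 if host:port accepts a TCP connection within $3 seconds (default 5).
port_open() {
  local host="$1" port="$2" secs="${3:-5}"
  # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection;
  # `timeout` kills the attempt if it hangs (the "Connection timed out" case).
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Report the SSH and web ports for one host.
check_instance() {
  local host="$1" port
  for port in 22 80 443; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port closed or unreachable"
    fi
  done
}
```

For example, `check_instance ec2-54-188-32-115.us-west-2.compute.amazonaws.com`. If every port times out, the problem is more likely the instance as a whole than one service.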

Troubleshooting

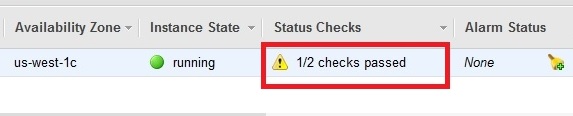

So I opened the EC2 web console and found that the instance was running, but the dashboard showed a yellow warning because only "1/2 checks passed" in the Status Check column.

The second check had failed: "Instance reachability check failed", starting 13 hours earlier. The help text suggests either rebooting to recover or creating a new instance. The latter is obviously an awful option, as I would lose all my data and configuration.
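Given how often this recurs, the same two checks can be polled from the AWS CLI instead of the console. A minimal sketch, assuming the AWS CLI is installed and configured for the instance's region; the helper names are hypothetical and the instance ID is yours to supply.

```bash
#!/usr/bin/env bash
# Print "<system-status>\t<instance-status>" (e.g. "ok	impaired") for one instance.
status_checks() {
  aws ec2 describe-instance-status \
    --instance-ids "$1" \
    --query 'InstanceStatuses[0].[SystemStatus.Status,InstanceStatus.Status]' \
    --output text
}

# Succeed only when both checks are "ok", i.e. the console shows "2/2 checks passed".
both_checks_ok() {
  local sys inst
  read -r sys inst < <(status_checks "$1")
  [[ "$sys" == "ok" && "$inst" == "ok" ]]
}
```

For example, `both_checks_ok i-0123456789abcdef0 || echo "status check failing"`, where the ID is a placeholder. A stopped instance is not listed by default, so it also counts as "not ok" here.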

The AWS Knowledge Center lists many possible causes and solutions for this issue, and I didn't want to read through all of them before trying the easiest fixes.

- First try: reboot. I rebooted the instance from the console twice, but it did not recover, and the yellow warning remained.

- Second try (the fix): stop, then start. This was not among the suggestions, and I certainly didn't want to create a new instance. So I stopped the instance from the console, waited a while, refreshed the console, and started it again once it had fully stopped. Fortunately, it ran normally and the "Instance Status Checks" passed! Note that this assigns a new public IPv4 address to the instance.
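The stop-then-start recovery can also be scripted with the AWS CLI. AWS documents that a stopped and restarted EBS-backed instance is usually moved to a new host computer, which may be why it helped where a reboot did not. That is a plausible explanation, not a confirmed diagnosis. A minimal sketch, assuming the AWS CLI is configured and the instance is EBS-backed (instance-store data would be lost on stop); the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: full stop/start cycle (not a reboot) for one instance.
# Prints the public DNS name afterwards, because the public IPv4 address
# usually changes unless an Elastic IP is attached.
stop_start_instance() {
  local id="$1"
  aws ec2 stop-instances --instance-ids "$id" >/dev/null
  aws ec2 wait instance-stopped --instance-ids "$id"   # polls until "stopped"
  aws ec2 start-instances --instance-ids "$id" >/dev/null
  aws ec2 wait instance-running --instance-ids "$id"   # polls until "running"
  aws ec2 describe-instances --instance-ids "$id" \
    --query 'Reservations[0].Instances[0].PublicDnsName' \
    --output text
}
```

Afterwards, `aws ec2 wait instance-status-ok --instance-ids <id>` blocks until both status checks pass.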

The whole process didn't take long. I didn't figure out why the failure happened; I just tried the easiest and lowest-cost way to recover.

I checked the dashboard's log feature by right-clicking the instance row and selecting Instance Settings -> Get System Log, but that log only keeps messages from the latest boot. Instead, after logging in to the instance later, I found many failures in the system log:

```
grep "Jul 21" /var/log/syslog | more
```

```
Jul 21 11:13:20 ip-172-31-27-75 kernel: [10936316.101665] systemd[1]: snapd.service: Service hold-off time over, scheduling restart.
Jul 21 11:14:59 ip-172-31-27-75 kernel: [10936417.286155] systemd[1]: systemd-udevd.service: Service has no hold-off time, scheduling restart.
Jul 21 11:33:10 ip-172-31-27-75 kernel: [10937507.673740] systemd[1]: snapd.service: Start operation timed out. Terminating.
Jul 21 11:38:02 ip-172-31-27-75 kernel: [10937797.283597] systemd[1]: snapd.service: Failed with result 'timeout'.
Jul 21 11:39:04 ip-172-31-27-75 kernel: [10937861.647054] systemd[1]: snapd.service: Triggering OnFailure= dependencies.
Jul 21 11:44:33 ip-172-31-27-75 kernel: [10938189.983524] systemd[1]: systemd-udevd.service: Start operation timed out. Terminating.
Jul 21 11:46:03 ip-172-31-27-75 kernel: [10938280.521246] systemd[1]: systemd-udevd.service: Failed with result 'timeout'.
Jul 21 11:54:24 ip-172-31-27-75 kernel: [10938781.794783] systemd[1]: snapd.service: Service hold-off time over, scheduling restart.
```

The log was full of repeating snapd.service and systemd-udevd.service failures, and their timestamps matched the time when the "Instance reachability check failed" status appeared. So I guess the state of the snapd.service and systemd-udevd.service daemons may matter to the reachability check, and their failure may lead to "Instance reachability check failed".
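To quantify those loops, and to reach entries that logrotate has already moved out of /var/log/syslog (on Ubuntu, syslog is typically rotated daily and older copies gzipped), the search can be scripted. A minimal sketch, assuming the classic "Mon DD HH:MM:SS" timestamp prefix and the systemd message wording shown above; both function names are hypothetical. For context, AWS describes the instance reachability check as sending an ARP request to the instance's network interface, so these daemon timeouts may be symptoms of a stalled system rather than items the check inspects directly; the logs alone don't settle which.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for digging through syslog after recovery.

# Print one day's lines from the current and rotated syslog files.
# $1: day prefix as it appears in the log, e.g. "Jul 21"
#     (single-digit days are space-padded, e.g. "Jul  1").
# $2: log directory (default /var/log).
# zgrep reads plain and .gz files alike; -h drops file-name prefixes.
syslog_for_day() {
  local day="$1" dir="${2:-/var/log}"
  zgrep -h -e "^$day" "$dir"/syslog*
}

# Tally systemd timeout/failure messages per unit from syslog text on stdin.
# Prints "<count> <unit>" lines, most frequent first.
count_unit_failures() {
  grep -oE '[A-Za-z0-9@_.-]+\.service: (Start operation timed out|Failed with result)' \
    | sed -E 's/: .*//' \
    | sort | uniq -c | sort -rn
}

# Usage (not run here):
#   syslog_for_day "Jul 21" | count_unit_failures
```

If the systemd journal is persistent on your image, `journalctl -b -1` is another way to read messages from the previous boot.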

Annoying SSH Login

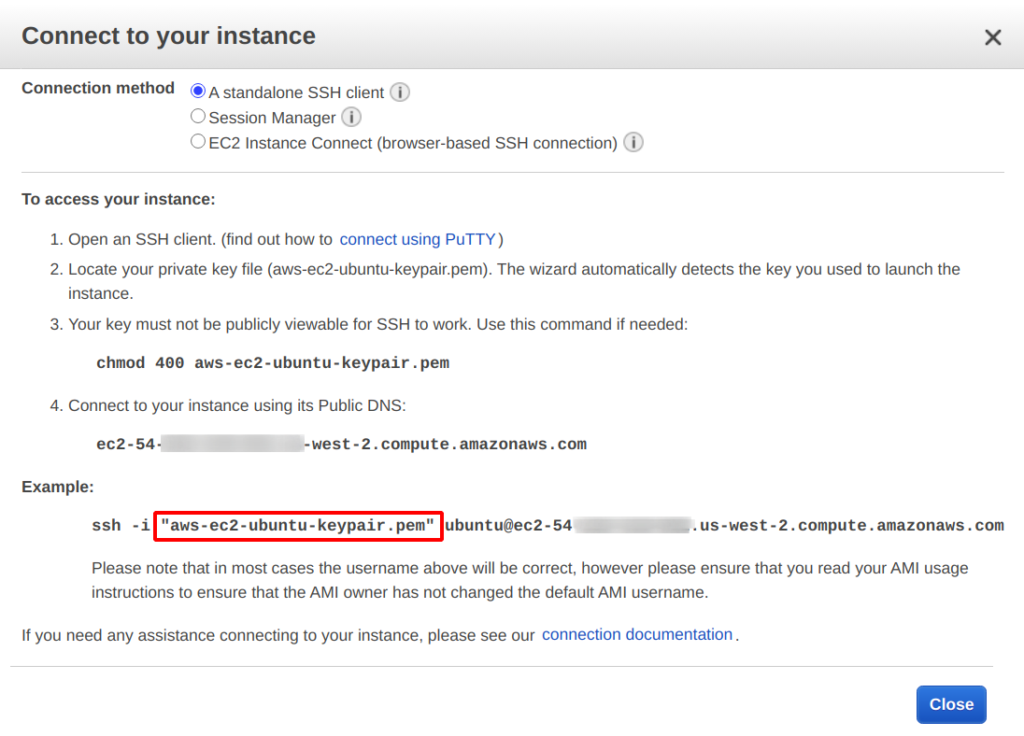

However, there was another problem when I used ssh to connect to it. I typed the following command (using TAB to auto-complete the .pem file name):

```
ssh -i aws-ec2-ubuntu-keypair.pem ec2-54-181-117-241.us-west-2.compute.amazonaws.com
Connection closed by 54.181.117.241 port 22
```

The response shows that the connection reached port 22 but was closed from the server side. It was so weird that I wondered whether something was wrong with the security group, the NACLs, or my private key; however, none of these should change when an instance is stopped and started. I checked the instance's security groups, its NACLs, and my local private key's timestamp, and none of them had changed.
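The security group can also be checked from the CLI to confirm that port 22 is still open to your address. A sketch, assuming the AWS CLI is configured; the security group ID is yours to supply, the function name is hypothetical, and the query only matches rules whose port range starts at 22 (an "All traffic" rule, for example, would not show up).

```bash
#!/usr/bin/env bash
# Hypothetical helper: list IPv4 CIDR ranges allowed inbound by rules that
# start at port 22. Rules covering a wider range (e.g. all traffic) are not matched.
ssh_ingress_cidrs() {
  aws ec2 describe-security-groups --group-ids "$1" \
    --query 'SecurityGroups[0].IpPermissions[?FromPort==`22`].IpRanges[].CidrIp' \
    --output text
}
```

For example, `ssh_ingress_cidrs sg-0123456789abcdef0` (a placeholder ID) should print a range that includes your current public IP.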

It took me nearly 10 minutes to find the solution, which turned up when I used the 'Connect' feature to start a web SSH connection from the EC2 dashboard.

I concluded that the problem was that the key file path on the command line should be quoted, as the example there shows.

August 3rd revised: Quoting is not the reason (in fact, the command works with or without quotes); the real reason is that I omitted the account name "ubuntu" when logging in. The generated AWS private key file (*.pem) must be matched with a user account name on the server.

```
ssh -i "aws-ec2-ubuntu-keypair.pem" ubuntu@ec2-54-181-117-241.us-west-2.compute.amazonaws.com
```

This is really annoying and frustrating because I often use ssh -i identity-file destination to log in to Google Cloud instances, and it has always worked. Also, the ssh manual doesn't say that the identity file should be quoted.

```
man ssh | grep -A2 -B4 -e "-i"
```

```
...
-i identity_file
        Selects a file from which the identity (private key) for public key authentication is read. The default is ~/.ssh/id_dsa, ~/.ssh/id_ecdsa, ~/.ssh/id_ecdsa_sk, ~/.ssh/id_ed25519, ~/.ssh/id_ed25519_sk and ~/.ssh/id_rsa. Identity files may also be specified on a per-host basis in the configuration file. It is possible to have multiple -i options (and multiple identities specified in configuration files). If no certificates have been explicitly specified by the CertificateFile directive, ssh will also try to load certificate information from the filename obtained by appending -cert.pub to identity filenames.
...
```

Still, I made a note to quote the .pem private key file when using the ssh -i option to log in to an AWS EC2 instance.

August 3rd revised: Logging in to GCP with a key file works the same way.

- When no username is given on the command line, ssh logs in with your local username, so this only works if an account with that name exists on the server and accepts your credentials (a password, or a key listed for that account).

- If we log in with a private key, we must specify the username associated with the matching public key on the server before the hostname/IP, e.g. ubuntu@host.
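Two small helpers make the username explicit. `ssh -G` prints the configuration ssh would use for a destination without connecting, which reveals the implicit username, and an ~/.ssh/config entry lets a short alias carry the user and key (`ssh -l ubuntu host` is equivalent to `ssh ubuntu@host`). A sketch: the function names and the alias `mysite` are hypothetical, `ubuntu` is the default user on Ubuntu AMIs (Amazon Linux uses `ec2-user`), and `IdentitiesOnly yes` keeps ssh from offering other agent keys instead of the configured one.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for making the remote username explicit.

# Print the remote username ssh would log in as, without connecting.
# `ssh -G` evaluates the command line plus ~/.ssh/config, prints the result, and exits.
effective_ssh_user() {
  ssh -G "$@" | awk '$1 == "user" { print $2; exit }'
}

# Print an ~/.ssh/config stanza that pins the user and key for one host.
# Args: alias, hostname, key file, optional user (defaults to "ubuntu").
ssh_config_entry() {
  local name="$1" host="$2" key="$3" user="${4:-ubuntu}"
  cat <<EOF
Host $name
    HostName $host
    User $user
    IdentityFile $key
    IdentitiesOnly yes
EOF
}

# Usage (not run here; the key path is wherever your .pem lives):
#   effective_ssh_user ec2-54-181-117-241.us-west-2.compute.amazonaws.com
#   effective_ssh_user ubuntu@ec2-54-181-117-241.us-west-2.compute.amazonaws.com
#   ssh_config_entry mysite ec2-54-181-117-241.us-west-2.compute.amazonaws.com \
#     "$HOME/aws-ec2-ubuntu-keypair.pem" >> ~/.ssh/config
#   ssh mysite
```

Without a user@ prefix, the first call should print your local username (unless ~/.ssh/config sets User), while the second should print ubuntu. Since the public DNS name changes after a stop/start, the HostName line would need updating unless an Elastic IP is attached.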
